As AI becomes more embedded in the research and design process, our role as designers is shifting from doing all the work to evaluating the quality of what AI produces. Insight evaluation is about learning to see the signals of quality in its outputs — when an insight is grounded and useful, and when it’s vague, biased, or simply wrong.

Why Evaluation Matters

AI can generate ideas and insights quickly, but speed isn’t the same as rigor. Without consistently evaluating AI outputs, we risk building strategies and products on shaky foundations: insights that sound smart but collapse under scrutiny.

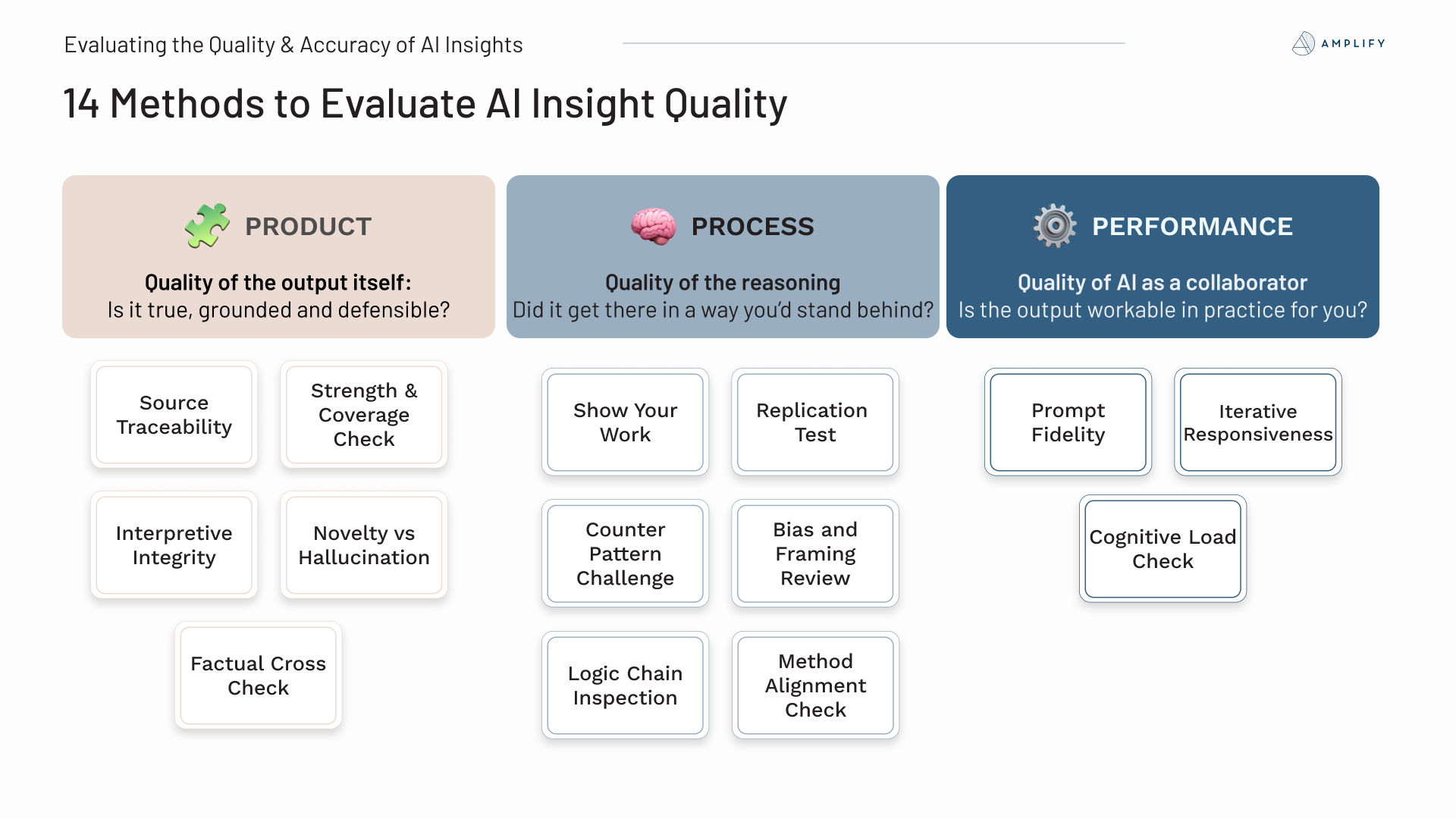

Three Dimensions of Discernment

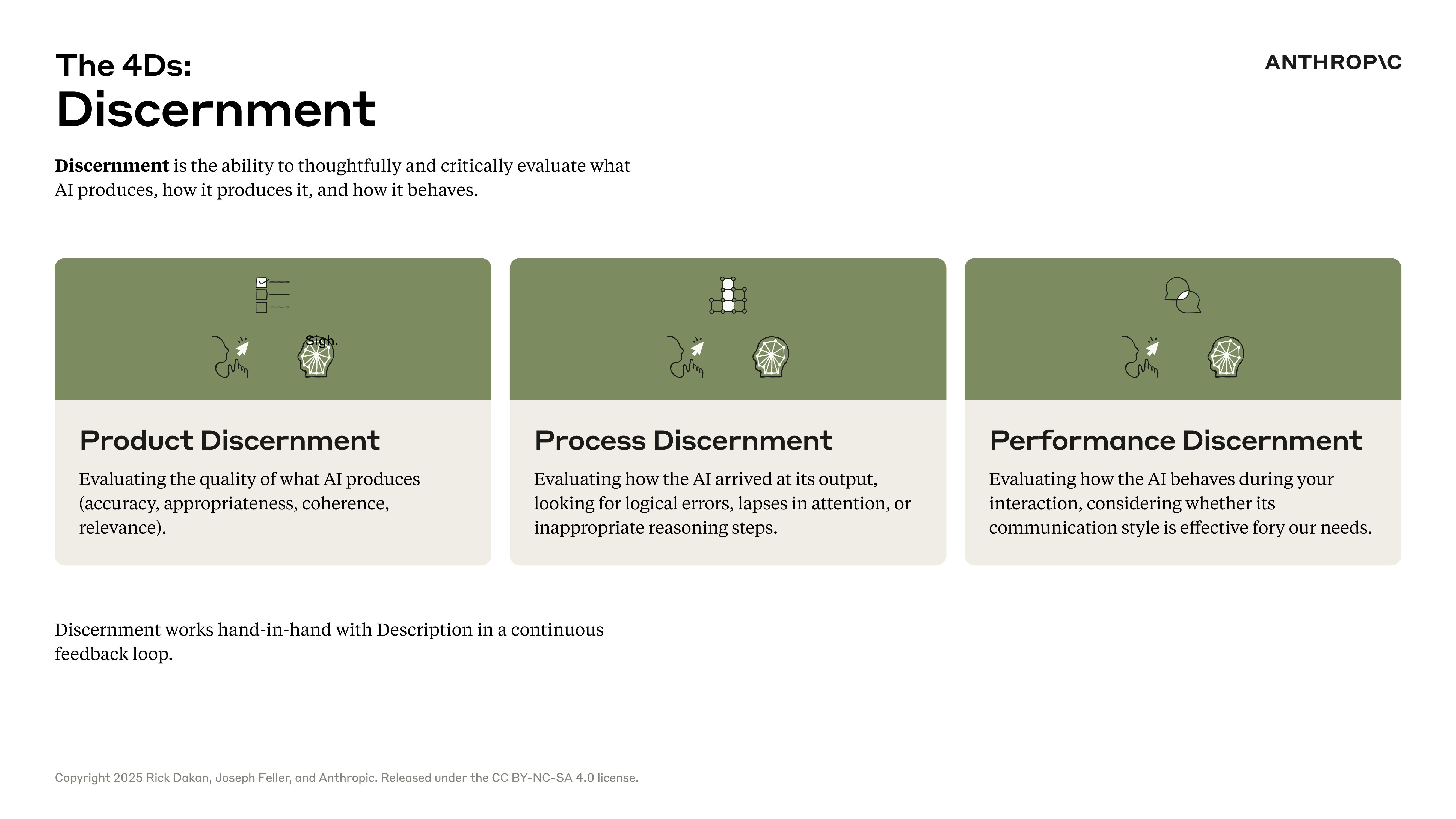

The Anthropic 4D framework breaks "discernment" into three dimensions: product, process, and performance. Together, they help you evaluate both what AI produces and how it got there. AI can sound confident while being completely wrong, and this process helps you see the difference.

Practicing Discernment

Discernment is a muscle you build through constant comparison, questioning, and correction. We have to develop it as a reflex response to what AI gives us. In this week's exercise, you'll practice several methods:

- Compare across tools. Run the same prompt in multiple AIs (e.g., Claude, ChatGPT, Gemini) and see where they differ. Patterns reveal strengths and blind spots.

- Ask for reasoning. Prompt AI to “show its steps.” Transparency helps you spot gaps in logic or data.

- Trace it back to evidence. Return to the original source (like interview transcripts). Does the AI’s claim appear there? Or did it invent connections?

- Look for empathy and depth. Does it capture nuance, or just summarize? Does it understand the “why,” not just the “what”?

Discernment isn’t just about catching AI mistakes; it’s about understanding its assumptions and where to correct them.
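
If you want to make the "trace it back to evidence" check repeatable, a small script can do the first pass. The sketch below is only an illustration and is not part of the toolkit. It assumes you have asked the AI to cite verbatim participant quotes and that your transcripts are available as plain text. The function names (`normalize`, `trace_quotes`) and the sample data are hypothetical.

```python
import re


def normalize(text: str) -> str:
    """Lowercase, straighten curly quotes, and collapse whitespace."""
    text = text.replace("\u2019", "'").replace("\u2018", "'")
    text = text.replace("\u201c", '"').replace("\u201d", '"')
    return re.sub(r"\s+", " ", text).strip().lower()


def trace_quotes(quotes: list[str], transcripts: dict[str, str]) -> dict[str, list[str]]:
    """Map each quote to the names of the transcripts that contain it verbatim."""
    cleaned = {name: normalize(body) for name, body in transcripts.items()}
    return {
        quote: [name for name, body in cleaned.items() if normalize(quote) in body]
        for quote in quotes
    }


# Hypothetical data, for illustration only.
transcripts = {
    "participant_1": "I usually give up on the export screen. It takes   too long.",
    "participant_2": "Honestly the onboarding was fine, I just never found the settings.",
}
quotes = [
    "give up on the export screen",   # appears in participant_1
    "the pricing page confused me",   # appears nowhere
]

results = trace_quotes(quotes, transcripts)
for quote, sources in results.items():
    status = ", ".join(sources) if sources else "NOT FOUND, check the source yourself"
    print(f"{quote!r} -> {status}")
```

A verbatim match only shows that the words exist in the transcript. Whether the AI interpreted them fairly is still your call, and a "not found" result may simply mean the AI paraphrased, so treat the output as a list of claims to re-read rather than a verdict.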

When You Notice Errors

When you find weak reasoning or hallucinations, don’t discard the AI’s work! Use it as feedback material.

Ask:

- “Where did you get that insight?”

- “Show me which part of the data supports this claim.”

- “What would this look like from another participant’s point of view?”

This is an important part of the iterative process of augmentation, where the AI revises its answer in response to your feedback and you learn how to steer it better next time.

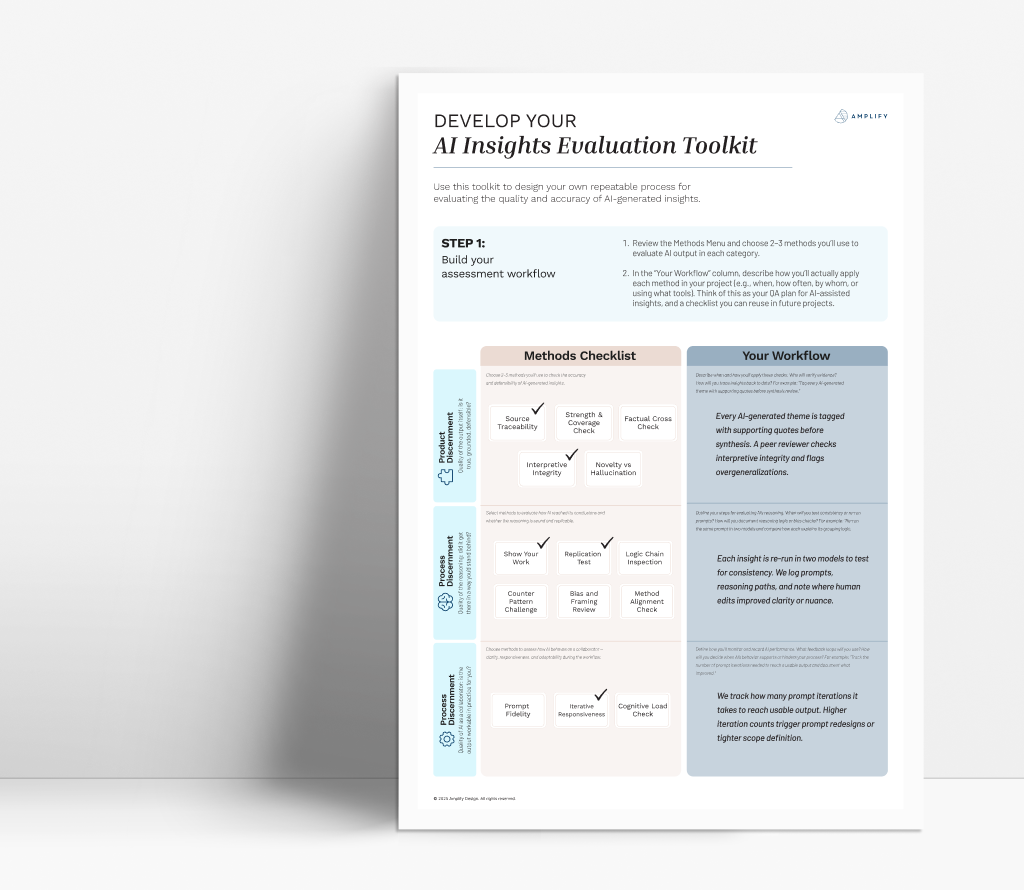

Download the AI Insights Evaluation Toolkit

We've developed a toolkit of 14 discernment methods to evaluate the product, process, and performance of AI insights, plus a template to help you plan how to put an evaluation process into practice in your own work and team.

👉🏽 Download the toolkit here.