ZeroWidth and Amplify recently collaborated on a mixed-methods research and strategy project that deeply integrated ChatGPT o3 and o3 Pro with (human) design researchers.

Here’s what we learned, and what we’ll be careful to do (and not do) on projects when a large language model is a part of the research team.

TL;DR:

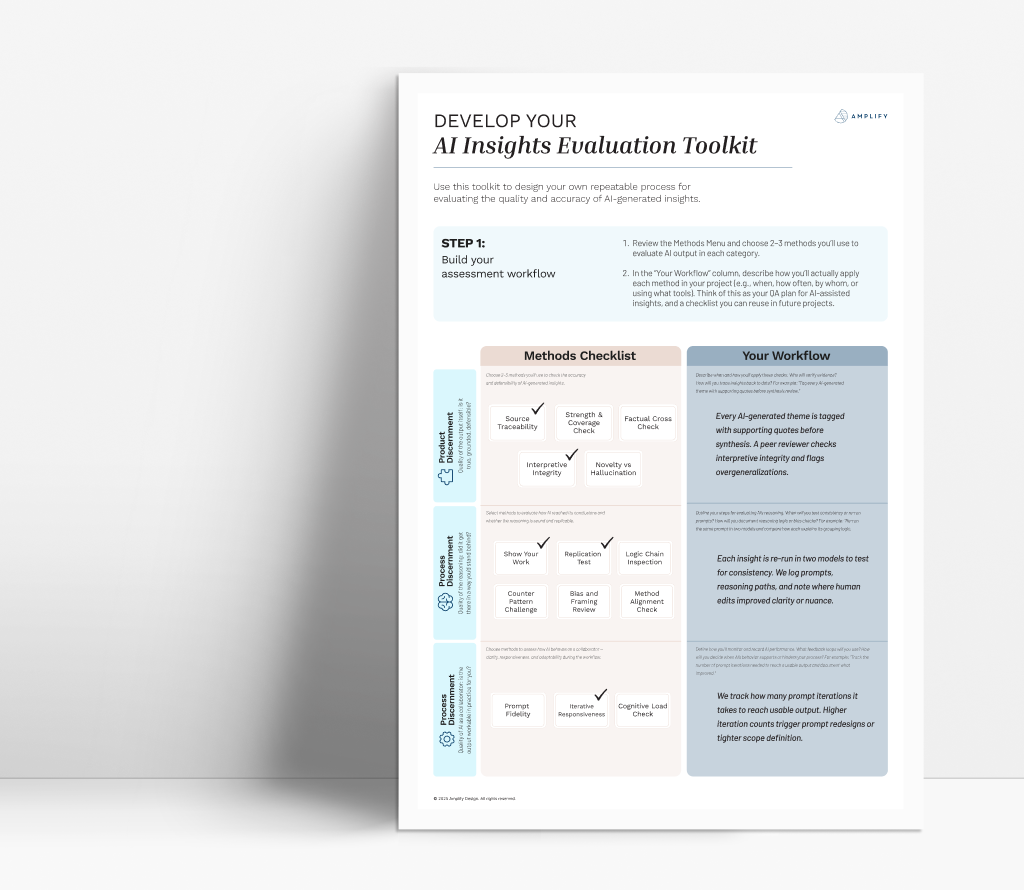

We should all be using LLMs to improve our work, but we should trust their outputs less. Researchers and strategists have to practice constantly interrogating and validating LLM-generated insights—as well as using LLMs to stress test our own work.

The big surprise was how that curiosity and questioning dynamic (is that really true or did you make it up? That’s cool, can you take that further?) forced us to revisit our own practices.

What happens when the model’s analysis differs from your own - is the model wrong, or are you both right?

4 real-world rules for using ChatGPT in design research

1. Co-pilot, not autopilot

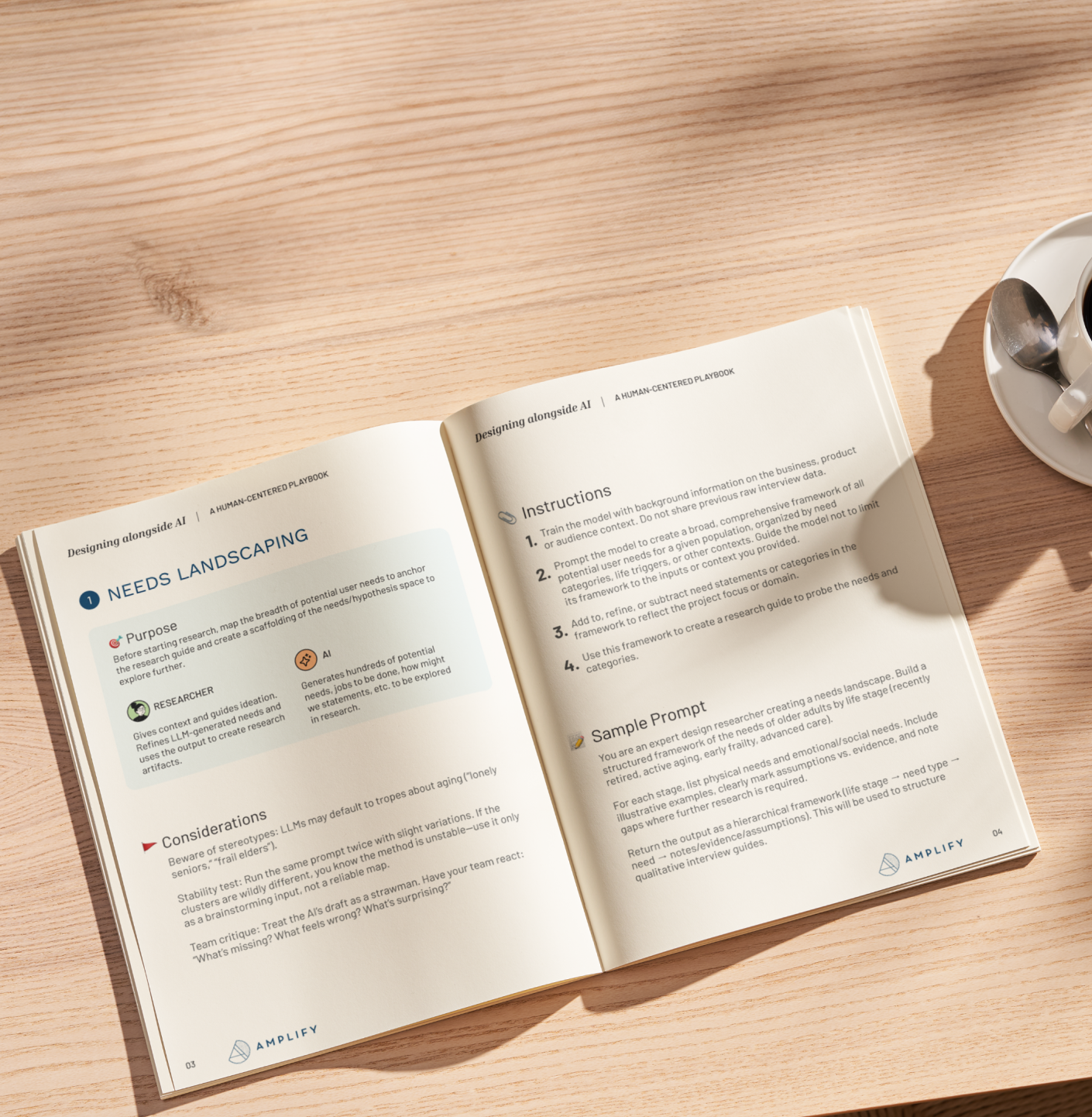

Every stage of a research and strategy project – e.g., cleaning data, drafting personas, synthesizing needs, extracting opportunities – should combine human judgment and AI refinement.

For example, in this project: GPT clustered initial themes → we sanity-checked, tweaked, and pushed it further. We fed our qual insights into GPT’s quant analysis → GPT built on our frameworks and found new sub-patterns and need clusters.

- Tip: Start every synthesis prompt with “Here’s what I’m thinking so far…” Sharing your half-baked logic frames the model’s job: add, challenge, or refine—not reinvent. You’ll get sharper follow-ups (and fewer hallucinations) because ChatGPT is reacting to your reasoning instead of guessing what you want.

2. Trust AND verify

LLMs have blind spots: technical issues, data gaps, and inherent biases in what they choose to value in analysis. One early run quietly ignored 3 of our 12 interviews; the only giveaway was that those voices never showed up in the quotes. (Good thing we noticed!)

- Tip: Spell out the exact files to reference (“use transcripts 1-12, exclude ‘pilot.docx’”) and ask the model to list what it used before analyzing.
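
One way to catch a silently dropped interview is to check which transcripts actually show up in the quotes the model returns. Here is a minimal sketch, assuming you have already pulled the quotes into a list where each one is tagged with the transcript it came from. The `transcript_id` field and the sample data are hypothetical, not something ChatGPT produces by default.

```python
def missing_transcripts(expected_ids, quotes):
    """Return the expected transcript IDs that never appear as a quote source."""
    # Hypothetical structure: each quote is a dict with a "transcript_id" key.
    cited = {quote["transcript_id"] for quote in quotes}
    return sorted(set(expected_ids) - cited)


# Illustrative data only: 12 transcripts expected, quotes drawn from the first 9.
expected = range(1, 13)
quotes = [{"transcript_id": n, "text": "(quote text)"} for n in range(1, 10)]

print(missing_transcripts(expected, quotes))  # [10, 11, 12]
```

An empty list doesn't prove the model weighed every interview fairly, but a non-empty one is a cheap early warning.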

3. Capture the receipts

Nothing is scarier than not being able to reproduce the analysis that drives your strategy. Capturing supporting data is not just insurance against forgetting; what you capture also becomes an explicit artifact of design decisions, making visible the assumptions and rationale often left hidden. There's a kind of invisible 'scaffolding' of design that AI demands we make more explicit to build trust in it.

- Tip: Paste critical tables and quotes into a shared doc the moment they pop out, then tell GPT: “This is the source of truth—build on it, don’t reinvent it.”

- Tip: Keep a paper trail of supporting documentation. Prompt GPT for persona criteria, model logic, supporting quotes, etc. for every important output. Sounds dull, but when a client asks “Where did this insight come from?” you’ll have the breadcrumbs.
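
If a shared doc feels too manual, the same paper trail can be kept as a simple log file. Below is a rough sketch of one possible approach, not the workflow we used on this project: each important output is saved with its prompt, the source files it was told to use, and a hash so later edits are detectable. The function names, field names, and file path are all assumptions made for illustration.

```python
import hashlib
import json
from datetime import datetime, timezone


def make_receipt(prompt, output, sources):
    """Bundle one model output with the context needed to trace it later."""
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "prompt": prompt,
        "sources": list(sources),
        "output": output,
        # The hash lets you confirm later that the saved output was not edited.
        "output_sha256": hashlib.sha256(output.encode("utf-8")).hexdigest(),
    }


def append_receipt(path, receipt):
    """Append the receipt as one JSON line to a log file (hypothetical path)."""
    with open(path, "a", encoding="utf-8") as log:
        log.write(json.dumps(receipt, ensure_ascii=False) + "\n")
```

Calling `append_receipt("receipts.jsonl", make_receipt(prompt, output, sources))` after each key output gives you a file you can search when a client asks where an insight came from.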

4. Put bias checks on the calendar

Before celebrating any shiny insight, ask GPT to critique itself: “Whose voice is missing? What might be skewed?” On our project, the answers weren’t perfect, but they flagged gaps we could fill with fresh recruiting. Kudos to the model for being self-aware! (Sometimes.)

- Tip: Block 30 minutes on the team calendar for a bias check, right after your first insight pass.